I finally have a homelab to support my VCAP-DCA studies and my job. My current work often requires me to test complex upgrades or complex setups.

VMware Hands-on Lab and VMware Product Walkthrough are great for learning how to configure specific features. But not all products and features are available, and the labs do not provide a walkthrough from scratch, for example installing an ESXi host, vCenter Server, etc.

It was not easy for me because I needed to submit and present the plan to my CFO: my wife. WAF (Wife Acceptance Factor) is one of the design factors that needs to be considered, and there are also some technical constraints. I can only submit a Purchase Order after the BoQ has been approved by the CFO.

- Total budget is USD 2,000.

- Low power draw. The apartment's maximum load is 1,300W, shared with the fridge, aircons, TV, lights, etc. We are looking at a lab that draws up to 200W, or 300W at most.

- Compact and small form factor. The apartment is only 33m², so there is not much free space left.

Looking at the lists above, I was not sure I could get a homelab that meets these requirements and constraints, so I looked for a cloud service that could provide a lab with a bunch of VMs running nested ESXi. I found Ravello Systems, but I was not sure I could rely on online labs and still preferred a physical lab.

I visited Jason Langer's blog and read his post on replacing a homelab with Ravello Systems.

I was interested in the physical lab designs based on Micro-ATX with a Lian-Li case and on Intel NUC. The Micro-ATX based lab can provide 32GB RAM per host, but its power supply is rated at 400W, above the 300W maximum I set. So I need to go with the Intel NUC with 16GB RAM.

I was planning to get a small 4-bay storage unit for SOHO/SMB like the Synology DS415+, but it is quite expensive. The lowest-priced 4-bay alternative from Synology is the DS414slim, but it is still quite expensive and I was not sure the total budget would be sufficient. So I decided to go with VMware VSAN.

To build a VSAN with NUCs, I need a NUC that supports multiple disks (1 SSD and 1 HDD). There are a lot of NUC models, and I wanted something that can run vSphere 6.

Florian Grehl has an article on an ESXi 6.0 image that works with the NUC. Most local electronics/IT shops here sell the NUC as a complete set, and in the end the bundled memory and disks would be useless since I would replace them with 16GB RAM and at least a 120GB SSD + 1TB HDD. A NUC with Intel vPro is cool because it provides KVM Remote Control. I agree with Mike Tabor, who pointed out in his article Intel NUC i5 5th Generation an ESXi lab improvement that the best fit would be the NUC5i5MYHE: 5th Gen processor, 2 internal disks, and Intel vPro. So I contacted a local Intel distributor to ask for a BoQ on the NUC5i5MYHE / D54250WYKH2 / NUC5i5RYH. They only sell Intel products, so I would need to buy memory, disks, etc. from different shops and build my own. The total cost is above $2K, and it is quite troublesome to purchase from different shops. One of the risks is that warranty and support are separate, and there could be compatibility issues if I do not pick the correct brand and model.

I looked for alternatives to the Intel NUC, and the Shuttle DS81 looks promising.

Below are some links that cover the Shuttle DS81 for homelabs:

Ultrasmall computers for your VMware lab - Intel NUC and Shuttle DS81 preview:

https://www.youtube.com/watch?v=ullzf3TqhoE

The Perfect vSphere 6 Home Lab | Ryan Birk – Virtual Insanity:

http://www.ryanbirk.com/the-perfect-vsphere-6-home-lab/

Build a new home lab VMGuru:

https://www.vmguru.com/2015/02/build-new-home-lab/

Building a ESXi 5.5 Server with the Shuttle DS81:

https://globalconfig.net/building-esxi-5-5-server-shuttle-ds81/

It can run vSphere 6; you only need to inject the Realtek 8111G VIB drivers into the ESXi 6 image with PowerCLI 6 or with the v-Front ESXi-Customizer by Andreas Peetz. The drivers can be found via one of the Shuttle DS81 homelab links above. Although it is slightly bigger than the Intel NUC, the Shuttle DS81 has two advantages: it comes with dual Gigabit Ethernet NICs, and its processor speed is higher than the NUCs'. I decided to order 3 x Shuttle DS81 from a local distributor with the following hardware specifications:

Intel® Core™ i3-4170 Processor (3M Cache, 3.70 GHz)

Kingston 8GB 1600MHz DDR3 (PC3-12800) SODIMM Memory

Samsung 850 EVO 120 GB mSATA 2-Inch SSD

HGST Travelstar 7K1000 2.5-Inch 1TB 7200 RPM SATA III 32MB Cache Internal Hard Drive

SanDisk 16GB Class 4 SDHC Memory Card

For the switch, I started with the TP-Link 8-Port Gigabit Easy Smart Switch TL-SG108E. It supports VLANs, IGMP Snooping, and Link Aggregation, and it has a low power draw. I also bought a small UPS/AVR, the CyberPower BU600E. I used an energy/watt meter to validate all of the above configurations, as the CFO very much wanted to validate it herself. For all 3 Shuttle DS81 nodes + the 8-port GbE TP-Link switch + the UPS, the total power draw turned out to be only between ~65W and 160W :)
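The measured numbers can be checked against the constraints from the CFO with a few lines of Python. This is only a minimal sketch: the wattage figures are the ones quoted in this post, while the function and constant names are made up for illustration.

```python
# Figures quoted in this post; all names below are illustrative.
APARTMENT_LIMIT_W = 1300   # maximum load of the apartment
LAB_TARGET_W = 200         # preferred ceiling for the lab
LAB_HARD_CAP_W = 300       # absolute maximum for the lab
MEASURED_IDLE_W = 65       # approximate low reading on the watt meter
MEASURED_PEAK_W = 160      # high reading on the watt meter


def power_summary(peak_w, target_w, cap_w, apartment_w):
    """Compare a measured peak draw against the lab and apartment limits."""
    return {
        "within_target": peak_w <= target_w,
        "within_cap": peak_w <= cap_w,
        "headroom_to_target_w": target_w - peak_w,
        "share_of_apartment_pct": round(100 * peak_w / apartment_w, 1),
    }


if __name__ == "__main__":
    summary = power_summary(
        MEASURED_PEAK_W, LAB_TARGET_W, LAB_HARD_CAP_W, APARTMENT_LIMIT_W
    )
    for key, value in summary.items():
        print(f"{key}: {value}")
```

Even at the 160W peak, the lab stays 40W under the 200W target and uses roughly an eighth of the apartment's 1,300W limit.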

To install ESXi 6 on a USB/SD card, we can either install directly to the USB/SD card as the destination (with Workstation/Fusion) or use it as the installer source. You can read the details in Vladan's post here. To create a bootable ESXi 6 ISO that automatically applies a static IP address when the custom ISO first boots, we can use a ks.cfg; read more about it in William Lam's post here, or you can also create a kickstart for VSAN. I'm using an SD card as the ESXi boot device, but unfortunately VMware Fusion cannot detect the Mac's internal SD card reader. So I needed to create an ESXi installer on USB and then install from the USB to the SD card. After the ESXi hosts were ready, I could not install vCenter because there was no VMFS datastore, and we need a vCenter Server to configure a VSAN. There's an article on how to bootstrap a VCSA to a single VSAN node. Since I'm using a Mac, I cannot use the Client Integration Plug-In to deploy the VCSA and need to use the vcsa-cli-installer. To install using vcsa-cli, read Romain Decker's article here.
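With three hosts to install, the ks.cfg for each one can be generated instead of typed by hand. The sketch below is not taken from any of the linked posts; it renders a minimal kickstart file using standard ESXi scripted-install commands (`vmaccepteula`, `install`, `rootpw`, `network`, `reboot`). The hostname, addresses, and password are hypothetical placeholders, and `--firstdisk=usb` assumes the SD card shows up as USB storage on the host.

```python
import ipaddress


def render_ks(hostname, ip, netmask, gateway, dns, root_password,
              first_disk="usb"):
    """Render a minimal ESXi kickstart file with a static management IP."""
    # Fail early on malformed addresses instead of writing a broken ks.cfg.
    for value in (ip, netmask, gateway, dns):
        ipaddress.ip_address(value)

    lines = [
        "vmaccepteula",
        # Assumption: the boot device (SD card) is seen as USB storage,
        # so the SSD and HDD stay untouched for VSAN.
        f"install --firstdisk={first_disk} --overwritevmfs",
        f"rootpw {root_password}",
        "network --bootproto=static"
        f" --ip={ip} --netmask={netmask} --gateway={gateway}"
        f" --nameserver={dns} --hostname={hostname}",
        "reboot",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical lab values; replace them with your own.
    print(render_ks(
        hostname="esxi01.lab.local",
        ip="192.168.1.11",
        netmask="255.255.255.0",
        gateway="192.168.1.1",
        dns="192.168.1.10",
        root_password="ChangeMe123!",
    ))
```

Calling `render_ks` once per host with a different hostname and IP gives three nearly identical files, which keeps the nodes consistent before they join the VSAN cluster.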

At the moment, I have only installed VCSA 6.0u1, with Windows 2003 as AD, DNS, and DHCP. I used Windows 2003 because of its small size and low hardware requirements. With 3 nodes of 120GB SSD and 1TB HDD each, I get a 2.7TB VSAN datastore in total, as shown in the screenshots below.

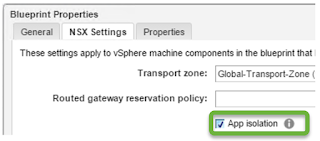

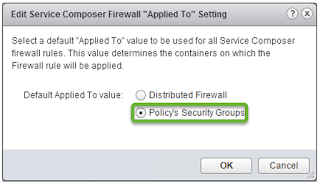
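The 2.7TB figure is roughly what you would expect from the disks. In a hybrid VSAN the SSD acts as the cache tier and does not add capacity, so only the three 1TB HDDs count. The drives are sold in decimal terabytes, and my assumption here is that the client reports binary units, which would explain why 3TB shows up as about 2.7TB. A small sketch of that arithmetic, with an illustrative function name:

```python
def raw_capacity_tib(nodes, hdd_tb_per_node):
    """Raw capacity-tier size in binary terabytes (TiB).

    Only the HDDs are counted: in a hybrid VSAN the SSD is cache only.
    Drive vendors use decimal TB (10**12 bytes); the result is in
    binary units (2**40 bytes).
    """
    total_bytes = nodes * hdd_tb_per_node * 10**12
    return total_bytes / 2**40


if __name__ == "__main__":
    capacity = raw_capacity_tib(nodes=3, hdd_tb_per_node=1)
    print(f"Raw VSAN capacity: {capacity:.2f} TiB")
```

This is raw capacity only. The usable space is lower, since VSAN keeps replicas of each object according to the storage policy.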

I'm planning to continue by installing NSX 6.2, following Thomas Beaumont's blog post here.